What is certified robustness?

Unlike empirical robustness, which focuses on improving model performance on adversarial examples, certified robustness provides a provable guarantee that a model's prediction will not change under any perturbation within a specified radius. No adversarial attack confined to that radius can alter the prediction.
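To make the idea of a certified radius concrete, here is a minimal sketch for the common case of a network that is L-Lipschitz under the L2 norm. It uses the standard margin bound: the prediction cannot change within radius margin / (sqrt(2) * L), where margin is the gap between the top two logits. The function names and the example logits are illustrative assumptions, not taken from the paper.

```python
import math


def certified_radius(logits, lipschitz_const=1.0):
    """L2 radius within which the top-1 prediction provably cannot change.

    Assumes the network producing `logits` is `lipschitz_const`-Lipschitz
    with respect to the L2 norm (an assumption of this sketch).
    """
    ranked = sorted(logits, reverse=True)
    margin = ranked[0] - ranked[1]  # gap between the top two logits
    return margin / (math.sqrt(2.0) * lipschitz_const)


def is_certified(logits, label, epsilon, lipschitz_const=1.0):
    """True if the prediction is correct and certified at radius `epsilon`."""
    predicted = max(range(len(logits)), key=logits.__getitem__)
    return predicted == label and certified_radius(logits, lipschitz_const) >= epsilon


# Hypothetical logits for a 3-class problem.
example_logits = [3.0, 1.0, 0.5]
radius = certified_radius(example_logits)
certified = is_certified(example_logits, label=0, epsilon=36 / 255)
```

A larger logit margin or a smaller Lipschitz constant yields a larger certified radius, which is why both the layer design and the loss function matter for this kind of guarantee.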

Our Contributions

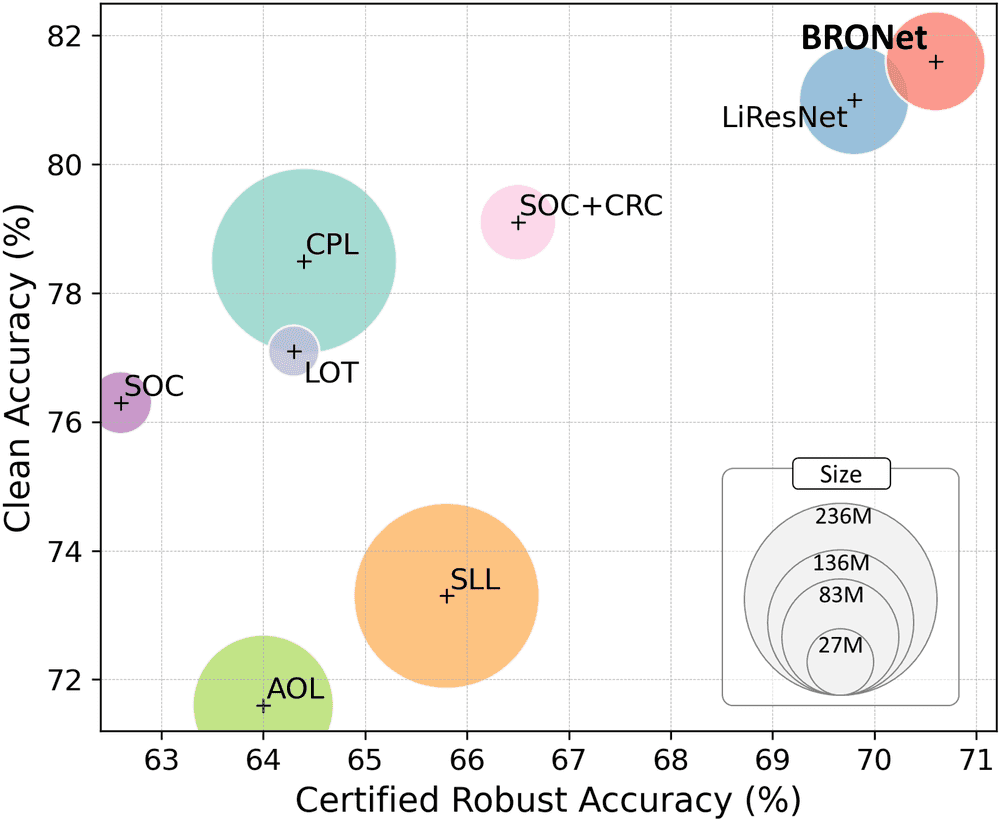

We introduce two core innovations for building more effective certifiably robust networks:

- Block Reflector Orthogonal Layer (BRO): A low-rank, approximation-free orthogonal convolutional layer designed to efficiently construct Lipschitz neural networks, improving both stability and expressiveness.

- Logit Annealing Loss (LA): An adaptive loss function that anneals margin allocation across training samples, dynamically balancing classification margins to improve certified robustness.
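As a rough illustration of the block reflector idea behind BRO, the sketch below builds a dense orthogonal matrix W = I - 2V(VᵀV)⁻¹Vᵀ from a low-rank parameter V and checks that it preserves distances, which makes the map 1-Lipschitz. This is the standard block reflector construction applied to a plain matrix. It does not attempt to reproduce the convolutional layer from the paper, and the function name, shapes, and random inputs are assumptions made for the example.

```python
import numpy as np


def block_reflector(V):
    """Return W = I - 2 V (V^T V)^{-1} V^T for a full-column-rank V of shape (n, m).

    W is symmetric and orthogonal, and it is exact: no iterative
    approximation is involved.
    """
    n = V.shape[0]
    # Solve (V^T V) X = V^T rather than forming the inverse explicitly.
    X = np.linalg.solve(V.T @ V, V.T)
    return np.eye(n) - 2.0 * V @ X


rng = np.random.default_rng(0)
V = rng.standard_normal((8, 3))  # low-rank parameter: n = 8, rank m = 3
W = block_reflector(V)

# Orthogonality check: W^T W should equal the identity up to rounding error.
orth_error = np.abs(W.T @ W - np.eye(8)).max()

# Distance preservation: ||Wx - Wy|| equals ||x - y||.
x = rng.standard_normal(8)
y = rng.standard_normal(8)
dist_in = np.linalg.norm(x - y)
dist_out = np.linalg.norm(W @ x - W @ y)
```

Because the parameter V has only m columns, the number of free parameters grows with n * m rather than n * n, while the resulting W is still a full n-by-n orthogonal matrix.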

Citation

@inproceedings{lai2025enhancing,
  title={Enhancing Certified Robustness via Block Reflector Orthogonal Layers and Logit Annealing Loss},
  author={Bo-Han Lai and Pin-Han Huang and Bo-Han Kung and Shang-Tse Chen},
  booktitle={International Conference on Machine Learning (ICML)},
  year={2025},
  note={Spotlight}
}